The robots.txt file can bring life – or death – to a website’s SEO. Here at periscopeUP, we perform audits on websites every day, and these files are a top priority. A single mistake in your robots file can stop search engines from ever accessing or looking at your website. Not everyone understands the robots file, and some even ignore it. In this technical post, we’ll cover the basics and some lesser known features that can be used in the robots.txt file.

The robots.txt file can bring life – or death – to a website’s SEO. Here at periscopeUP, we perform audits on websites every day, and these files are a top priority. A single mistake in your robots file can stop search engines from ever accessing or looking at your website. Not everyone understands the robots file, and some even ignore it. In this technical post, we’ll cover the basics and some lesser known features that can be used in the robots.txt file.

Technical issues? We can help. Contact our experts.

Allowing Access/Indexing

If you want Google to crawl and index your website, it should have a robots.txt file.

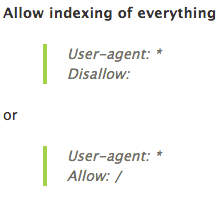

First, we have the user-agent (webcrawler/robot) with a wildcard character. This means that the following lines should be used for all user-agents out there trying to access the website.

User-agents can be any number of servers hitting your website. Some examples are Googlebot, Bingbot, Rogerbot, deepcrawl, etc. Here’s a list of all Google’s bots. Every service that offers to crawl or scan your website is using a user-agent name and can be blocked using your robots.txt file.

Second, we have the Allow/Disallow code which tells the user-agent what it can and cannot crawl/access. As long as you have this file and some form of allow/disallow, it helps control crawling, indexation, and can contribute to overall SEO ranking.

Blocking Folders, Content and Bots

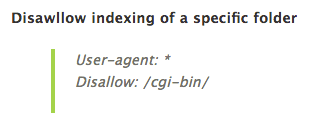

The next step is to determine which sections, folders, file types, or even individual files should not be accessed. Since you started by allowing robots to crawl everything, you must ensure that is really what should happen on your particular website and system. Every server has folders that may or may not need to be crawled or looked at. For example most cPanel shared servers have the “/cgi-bin/” folder which should not be crawled or accessed. This folder holds server level data and nothing pertaining to actual website content. For this example, you would place this line of code below the Allow line:

The robots file can also be used to block file types or individual files from being crawled. There is also a way to block all content in a folder and allow access to a single file inside that folder.

Some websites go further and specify that certain bots are not allowed to access the entire site. This may prevent competitors from snooping around. Still, this can cause issues when you’re doing your own analysis and crawl using tools available on the web. If you implement this bot ban, comment out those lines while you run your crawls. Once you have completed your analysis, you can bring those lines of code back to an active state.

Blocking Website Resources – CSS & JS

Blocking resources such as CSS and JS files can cause indexation and render issues. Years ago it was common practice to block these files to prevent bots from indexing the files in search results or to hide blackhat SEO tactics. In the last 5 years, we’ve seen a shift to allowing bots to access these files. This lets them determine how to render and access your website on different devices. Another reason to give bots access to these files is to allow them to understand your website as a whole instead of just text.

If you block assets or resource folders that include your JS and CSS website files, you hinder proper crawling and indexing of your entire site. Remove those lines from your robots file or explicitly allow the bots to have access to those areas and files.

Specifying A User-Agent

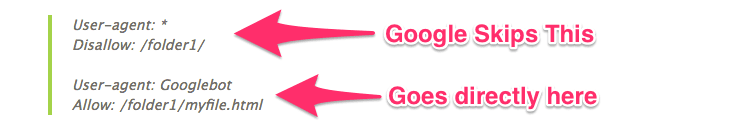

If you specifically add a bot to the user-agent line instead of using a wildcard, the bot will only follow what you have placed under that area and will ignore all wildcard or other code. This is important if you want to block a folder from Google but allow Google to see a single file.

In this example Google skipped over the first user-agent because Googlebot is specified in the second section. Google now has access to the entire folder where the file lives since the Disallow from the first section is not under Googlebot section.

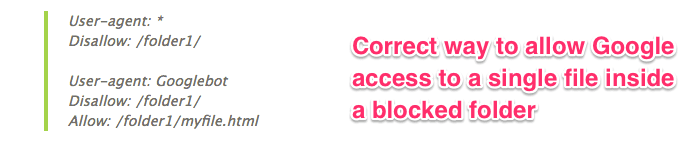

The second screenshot is the correct way to markup the robots file to allow access to the single file and not let Googlebot access the entire folder.

This is why you should always test your robots file for each bot using a robots file tester. Google offers a tester inside Google Search Console and will pull down your existing robots file for you. It only allows you to check using one of their bots. Still, this is a good starting point to ensure Google has the correct access.

Note: Just because you disallow a bot from accessing an area of your website doesn’t mean it won’t be indexed. This common misconception can cause sections of your website that should not be indexed to show up in results. The only way to officially not index a web page is to add a meta robots tag in the <head> section stating noindex.

Unofficial NOINDEX

There is an unofficial line of code you can use for Googlebot that will actually de-index or not index a folder, file, or URL inside the robots file. Be careful though, Google could stop supporting this at any time. Still, it’s worth noting if you’re struggling to get a page or file out of the index in Google. If you use “Noindex:” in a line followed by a URL or folder, Google will not index that page or folder. This could be a short term fix while you’re adding a header tag or x-meta-robots tag for your files.

The robots file is one of the most critical and powerful files you can have on your website. If you have issues with indexation or crawling, this is a great place to start.

Need help with robots file or indexation issues? Reach out to our experts.